Update: Since first writing this piece in 2017, Facebook launched 3D photos that use the depth data from the iPhone X portrait camera. Their implementation is fantastic: I love the subtle pan and zoom effect as you scroll past them, and of course the accelerometer-based peeking left and right. I'm surprised more platforms haven't made these standard, because they're just magical. Note also that I've updated some of the images from the original article to better illustrate the concepts.

WWDC is rumored to be pretty exciting this year. As with every year, the week before has been filled with wish lists and guesses from enthusiasts about what could be in the latest iOS release. I’ve compiled my own, but I realized that the thing I find most interesting is unlikely to be announced at WWDC, because it’s tied to new hardware.

The hardware in question is the dual front- and rear-facing cameras rumored to be on the next iPhone (that’s f-o-u-r cameras, folks). The existence of hardware isn’t enough to sell phones, though; it’s the new use cases enabled by the hardware that get people lining up on day one.

In case it wasn’t already apparent, the last couple years have ushered in a golden age of cameras as a platform. Far beyond mere photos and video, cameras are enabling computers to understand the world around us, and helping us capture, reconstruct, and remix the world. Through the iPhone, Apple has been one of the enablers of this golden age, and if their acquisitions and the product roadmap hints of the last few years are any indication, they will continue to be the driving force for years to come.

Below are a few thoughts on camera-related features that won’t be announced at WWDC, but that I hope we see soon — maybe even in Fall.

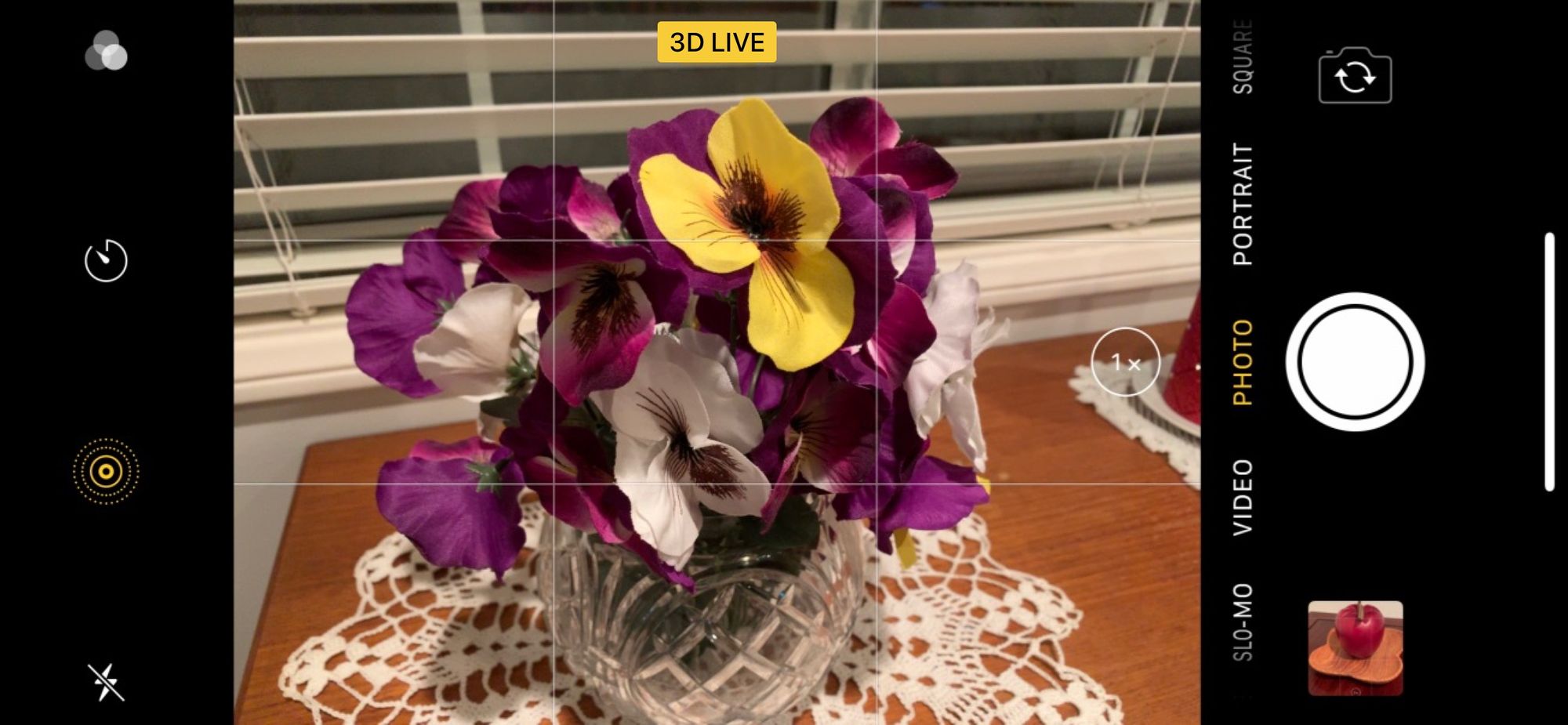

3D Photos

Imagine if the photos you looked at on your device were to suddenly gain depth — that when you rotated the device ever so slightly, the face you were just looking at head-on also rotated. 3D Photos is basically that.

3D images and stereoscopic cameras are not new. However, 3D content is typically consumed using 3D glasses or VR headsets, which present each eye with a different image that your brain interprets as having depth. 3D Photos takes a different approach to consumption, making flat photos come to life by having the device, not your brain, reconstruct the depth of the scene.

Viewing 3D Photos within Photos would be easy, likely just by rotating the device, the way you can with panoramas and 360 photos and videos on Facebook. As a secondary trigger, the user could 3D Touch the photo and then slowly swipe left or right, an extension of how Live Photos work now.

Done right, I think 3D Photos could be a flagship feature. The range of the 3D effect would be limited, but it wouldn’t take much to create a sense of depth. Even a few degrees of difference would be enough for our brains to extract much more meaning from the photos, and for humans (or still life) to emerge from flat pieces of glass.
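One way to picture the tilt interaction: map a small device rotation to a normalized view position, then shift each pixel in proportion to its inverse depth relative to a chosen focal plane, so the focal plane stays put while nearer and farther content move in opposite directions. This is a minimal sketch of that depth-based parallax idea, not how Apple or Facebook actually render anything; the ±5° tilt range, the strength constant, and the function names are all assumptions for illustration.

```python
def view_position(yaw_deg: float, max_yaw_deg: float = 5.0) -> float:
    """Map device yaw (degrees) to a view position clamped to [-1, 1].

    max_yaw_deg is a hypothetical tuning value: "a few degrees" of tilt
    sweeps the full parallax range.
    """
    t = yaw_deg / max_yaw_deg
    return max(-1.0, min(1.0, t))


def parallax_shift_px(t: float, depth_m: float, focus_m: float,
                      strength_px_m: float = 20.0) -> float:
    """Horizontal shift (pixels) for a pixel at depth_m when the view is at t.

    Pixels on the focal plane (depth_m == focus_m) don't move; nearer
    pixels shift one way and farther pixels the other, scaled by inverse
    depth. strength_px_m is an assumed constant, not a measured value.
    """
    return t * strength_px_m * (1.0 / depth_m - 1.0 / focus_m)


if __name__ == "__main__":
    t = view_position(2.5)  # half of the assumed 5-degree range
    for depth in (1.0, 2.0, 4.0):
        print(depth, parallax_shift_px(t, depth, focus_m=2.0))
```

With the focal plane at 2 m, the subject at 1 m slides 5 px, the focal plane stays fixed, and the background at 4 m slides 2.5 px the other way. That opposing motion is what sells the depth even over a few degrees of tilt.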

Of course, a very small interaxial separation between the two cameras means the 3D effect would be limited, and subjects would need to stay within a fairly narrow range of distances from the cameras. I’m not sure whether the interaxial separation on the upcoming iPhone is enough to make good-looking 3D Photos, but let’s assume it is.
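The standard pinhole stereo relation makes this limitation concrete: for two parallel cameras with baseline B and focal length f (in pixels), a point at distance Z shifts between the two images by d = f·B/Z pixels. Disparity falls off inversely with distance, so a small baseline leaves little depth signal for faraway subjects. The 1 cm baseline and 3000 px focal length below are placeholder values for illustration, not the specs of any iPhone.

```python
def disparity_px(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Pixel disparity for parallel stereo cameras: d = f * B / Z."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_px * baseline_m / depth_m


# Assumed, illustrative numbers: ~1 cm baseline, 3000 px focal length.
FOCAL_PX = 3000.0
BASELINE_M = 0.01

for z in (0.5, 1.0, 2.0, 5.0, 10.0):
    print(f"{z:>5.1f} m -> {disparity_px(FOCAL_PX, BASELINE_M, z):5.1f} px")
```

Under these assumptions a subject 1 m away produces about 30 px of disparity, but one 5 m away produces only about 6 px. That is why a tiny interaxial separation favors close subjects.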

Rear Camera for Landscape, Front Camera for Portrait

Currently, the iPhone 7 Plus has dual rear-facing cameras oriented horizontally, which means that a 3D effect from rotating left and right would only be possible for portrait shots. The new iPhone is rumored to orient the cameras vertically, so 3D Photos could be enabled for landscape shots, which makes much more sense for the rear camera. The rear cameras’ different focal lengths are very likely to remain, since they enable zoom. However, it may still be possible to get a 3D Photo effect through interpolation (similar to how interpolation is used in zoom), combined with a minimum subject distance for the effect to work: say, 5 feet. Closer than that, the telephoto camera is too zoomed in on the subject, and 3D Photos would be auto-disabled.
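The two ingredients mentioned here, matching the cameras’ fields of view and gating the effect on distance, could look roughly like this. It is a minimal sketch assuming 35 mm-equivalent focal lengths with a 2x ratio, a 4032×3024 frame, and the 5-foot threshold floated above; the function names and the simple center crop are hypothetical stand-ins for whatever interpolation a real pipeline would use.

```python
from dataclasses import dataclass

FEET_TO_M = 0.3048


@dataclass
class CropRect:
    x: int
    y: int
    width: int
    height: int


def matching_crop(wide_w: int, wide_h: int,
                  wide_focal_mm: float, tele_focal_mm: float) -> CropRect:
    """Center crop of the wide frame covering roughly the telephoto's view.

    Assumes 35mm-equivalent focal lengths and that both cameras point the
    same way; it ignores the small offset between the lenses, which is
    exactly the parallax a 3D effect would exploit.
    """
    scale = wide_focal_mm / tele_focal_mm  # e.g. 0.5 for a 2x telephoto
    w = round(wide_w * scale)
    h = round(wide_h * scale)
    return CropRect((wide_w - w) // 2, (wide_h - h) // 2, w, h)


def three_d_enabled(subject_distance_m: float,
                    min_distance_ft: float = 5.0) -> bool:
    """Auto-disable the effect when the subject is too close (hypothetical rule)."""
    return subject_distance_m >= min_distance_ft * FEET_TO_M


if __name__ == "__main__":
    print(matching_crop(4032, 3024, 28.0, 56.0))
    print(three_d_enabled(1.0), three_d_enabled(2.0))
```

For a 2x telephoto, the matching region is the central half of the wide frame in each dimension. Only after that alignment would the remaining horizontal offset between the two views carry the depth information.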

Meanwhile, the new iPhone is rumored to have dual front-facing cameras oriented horizontally, which fits the use case of portrait 3D Photo selfies. Different focal lengths don’t make much sense on the front; it makes more sense to give both front cameras the same focal length and use their binocular separation to create 3D Photo selfies at closer distances.

Just look at how Snap and Instagram have made looping video a thing — 3D Photos are the next logical step. I think this feature will produce a really amazing effect and will have to be seen to be believed. It will give iDevices a whole new depth, first hinted at in the parallax effect introduced in iOS 7.

Gaze Scrolling

Gaze tracking is the logical next input method to add after keyboard, mouse, touch, and voice. There are many benefits to using the eyes for certain input tasks, not least that it doesn’t involve moving or saying anything. Apple is likely already working to perfect gaze tracking, as it is pretty much necessary for AR to work seamlessly. If the upcoming iPhone includes a front-facing infrared light, a limited version of gaze tracking could launch even before Apple’s AR product arrives.

Scrolling is the most obvious use case I think a first version of gaze tracking would work well for. So much of iOS is driven by vertical scrolling, and prolonged scrolling can contribute to repetitive stress issues in your hands. I’d imagine scrolling is the single most used touch action on iPhones, ahead of even button presses, so it seems like a good place to optimize.

The best way I can think of to implement gaze scrolling is to trigger it when you look at the very top or bottom of the screen for a fixed time of about a second, at which point you would feel a haptic vibration and the screen would scroll. Continuing to gaze at the scroll area would shorten the wait before the next scroll, providing a functional analog to the acceleration of touch-based scrolling.
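A rough sketch of the dwell trigger and its acceleration, written as a small state machine fed by gaze samples. The 1-second initial dwell matches the figure above; the decay factor, the minimum dwell, the zone names, and the class itself are assumptions for illustration, and the actual scrolling and haptics are left to the caller. The `progress` value is one way to drive an on-screen cue while the timer runs.

```python
from typing import Optional


class DwellScroller:
    """Dwell-to-scroll trigger sketch (hypothetical; not a real iOS API).

    Call update() once per gaze sample with the zone being looked at
    ("top", "bottom", or None) and the seconds since the last sample.
    """

    def __init__(self, initial_dwell_s: float = 1.0,
                 decay: float = 0.6, min_dwell_s: float = 0.25) -> None:
        self.initial_dwell_s = initial_dwell_s
        self.decay = decay
        self.min_dwell_s = min_dwell_s
        self._zone: Optional[str] = None
        self._elapsed = 0.0
        self._dwell = initial_dwell_s

    @property
    def progress(self) -> float:
        """0..1 fill level of the current dwell timer."""
        return min(1.0, self._elapsed / self._dwell)

    def update(self, zone: Optional[str], dt: float) -> Optional[str]:
        if zone != self._zone:
            # Gaze moved to a new zone (or left the edges): start over.
            self._zone = zone
            self._elapsed = 0.0
            self._dwell = self.initial_dwell_s
        if zone is None:
            return None
        self._elapsed += dt
        if self._elapsed < self._dwell:
            return None
        # Report a scroll (a real app would also play a haptic tap here),
        # then shorten the next wait so sustained gaze accelerates scrolling.
        self._elapsed = 0.0
        self._dwell = max(self.min_dwell_s, self._dwell * self.decay)
        return "up" if zone == "top" else "down"
```

With 0.25 s samples, holding your gaze at the bottom triggers the first scroll after 1 s and the next one 0.75 s later. The interval keeps shrinking until it hits the assumed 0.25 s floor, and looking away resets everything.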

Limited gaze tracking also fits well with the upcoming nearly edge-to-edge iPhone design. The new iPhone has an even taller aspect ratio, which should leave some extra room for visual feedback while the scroll timer is running: perhaps a slow fade at the top or bottom of the screen that grows brighter until scrolling is triggered.

As far as implementation goes, using the gaze tracker only at the edges means it would need less accuracy, and calibration[1] could be confined to one dimension. The feature could be disabled when the user is out of range, which wouldn’t be much of a problem since you can just hold the iPhone a bit closer to your face. That’s much easier than on a MacBook or iPad, where you’d need to move your whole body to find the sweet spot; the Tobii Eye Tracker 4C I currently have exhibits exactly this annoying property.
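Confining calibration to the vertical axis reduces it to fitting a gain and an offset. The sketch below assumes the tracker reports a normalized vertical gaze coordinate (0 at the top of the screen, 1 at the bottom) while the user looks at a few known targets. It is ordinary least squares, not Tobii’s or Apple’s calibration procedure, and the function names are hypothetical.

```python
from typing import Sequence, Tuple


def fit_1d_calibration(raw: Sequence[float],
                       target: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of target ~= gain * raw + offset along one axis."""
    n = len(raw)
    if n != len(target) or n < 2:
        raise ValueError("need at least two paired samples")
    mean_r = sum(raw) / n
    mean_t = sum(target) / n
    var_r = sum((r - mean_r) ** 2 for r in raw)
    if var_r == 0:
        raise ValueError("raw samples must not all be equal")
    cov = sum((r - mean_r) * (t - mean_t) for r, t in zip(raw, target))
    gain = cov / var_r
    offset = mean_t - gain * mean_r
    return gain, offset


def apply_calibration(raw_y: float, gain: float, offset: float) -> float:
    """Map a raw vertical gaze reading to calibrated screen coordinates."""
    return gain * raw_y + offset


if __name__ == "__main__":
    # The user looks at targets at the top, middle, and bottom of the screen;
    # the (made-up) raw readings are compressed toward the center.
    gain, offset = fit_1d_calibration([0.1, 0.5, 0.9], [0.0, 0.5, 1.0])
    print(gain, offset, apply_calibration(0.9, gain, offset))
```

Because calibration drifts over time, the fit could be re-run quietly whenever the system has a reliable ground truth, for example right after the user scrolls by touch.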

Unfortunately, gaze tracking is rather difficult to implement accurately, and it will not work for everyone: obviously not for the blind, but also not for users with astigmatism, amblyopia, or strabismus, and probably not through thick or dirty glasses. Further, the whole UI paradigm of iOS is built on touch as the primary input source, and it doesn’t make sense to change that; using gaze tracking as a primary input method is best left for AR. It’s therefore best to start with gaze tracking as a supplementary input method, as 3D Touch was, strategically incorporating it into optional areas of iOS where its accuracy limitations won’t be too noticeable.

I’m personally excited for it because I’ve spent the last couple years overcoming an injury that made prolonged use of my hands a bit difficult. Gaze tracking is on the cusp of being useful to everyone (and life changing to some) and as much as I’d like it on Mac or iPad, if I were an Apple PM I’d make the call to put it on the iPhone first — it just makes so much sense in how we use the device, even in a limited form.

If accuracy is good, of course, I’d hope to see broader gaze tracking built into other parts of iOS, which is rumored to be going through a big redesign this year.

CameraKit and Raise-To-Shoot

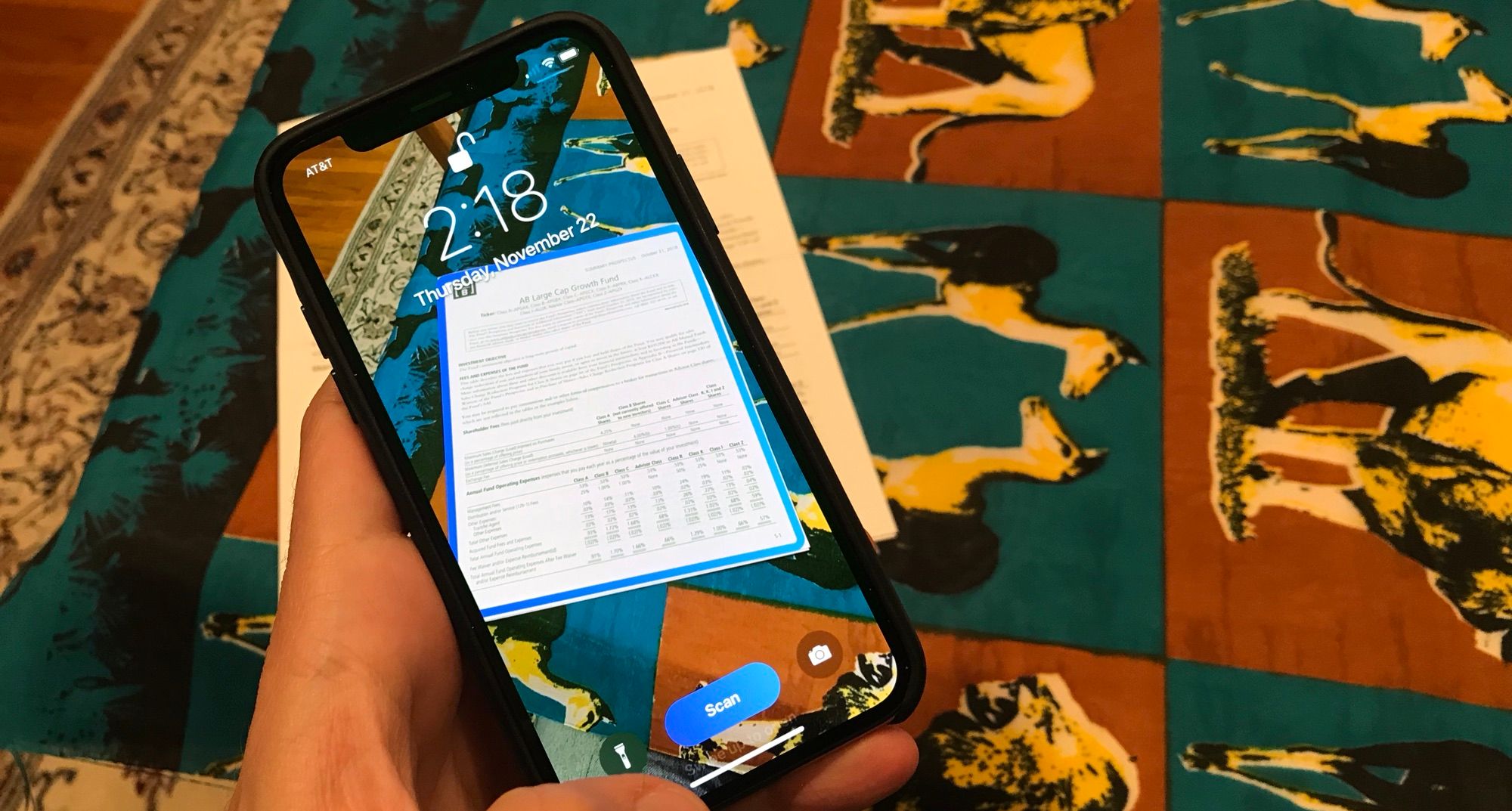

The camera is a platform, not just an app. Object detection and information overlays enable all sorts of use cases, from scanning pieces of paper like receipts (ScanBot) to looking up information about where you are (Yelp) to translating text (Word Lens), to simply taking pictures of someone who’s smiling back at you. Right now each of these is gated behind a different app, and their data doesn’t always belong in the Photos stream.

CameraKit would be something like SiriKit: developers could register certain use cases (identifying points of interest through visuals and GPS, detecting and scanning paper, detecting words and overlaying content), and those detectors would run in the background whenever the camera is running. The user would be shown relevant overlays and actions based on what they were pointing their device at. Looks like a receipt? The scan button appears, and when pressed the scan overlay comes up and auto-shoots once you’ve got it positioned right. Looks like some text in Thai that you can’t read? The translate button appears, and pressing it overlays the translation. Looks like a person smiling back at you? Well, just have it take the picture automatically, or wink at the front-facing gaze tracker.

This is really powerful when coupled with a lower-friction way to access the camera. In iOS 10, Apple added Raise-to-Wake, which automatically turns on the screen when it detects that you’ve picked up the phone. This immediately opened up the lock screen as a whole other interface in iOS for certain tasks, and was a brilliant addition. It’s probably time to go a step further and simply enable the camera when this happens, looking for relevant objects and presenting relevant actions upon detection, similar to how iBeacons surface relevant apps like the Apple Store app when you walk into an Apple Store.
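The SiriKit-style registration and the raise trigger fit together in a few lines. In this sketch, detectors are plain callables that inspect a frame and either propose an action or return nothing, and a frame is reduced to a set of labels that some vision model is assumed to have produced. CameraKit here is the imagined framework from this section, and every class, method, provider, and label name is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set

# A "frame" here is just the set of labels a vision model found in it;
# real detection (paper edges, text, faces) is out of scope for the sketch.
Frame = Set[str]
Detector = Callable[[Frame], Optional[str]]


@dataclass
class Suggestion:
    provider: str
    action: str


@dataclass
class CameraKitRegistry:
    """Hypothetical CameraKit: apps register detectors, the system runs them."""
    detectors: Dict[str, Detector] = field(default_factory=dict)
    camera_on: bool = False

    def register(self, provider: str, detector: Detector) -> None:
        self.detectors[provider] = detector

    def on_raise(self) -> None:
        # Raise-to-shoot: picking up the phone also starts the camera pipeline.
        self.camera_on = True

    def on_lower(self) -> None:
        self.camera_on = False

    def process(self, frame: Frame) -> List[Suggestion]:
        """Run every registered detector and collect the actions they propose."""
        if not self.camera_on:
            return []
        suggestions = []
        for provider, detect in self.detectors.items():
            action = detect(frame)
            if action is not None:
                suggestions.append(Suggestion(provider, action))
        return suggestions


if __name__ == "__main__":
    kit = CameraKitRegistry()
    kit.register("scanner", lambda f: "Scan" if "receipt" in f else None)
    kit.register("translator", lambda f: "Translate" if "thai_text" in f else None)
    kit.on_raise()
    print(kit.process({"receipt", "table"}))
```

Keeping detectors as small callables mirrors the intent-style registration of SiriKit: apps declare what they can recognize, and the system decides when to run them and how to present the resulting buttons.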

To go one step further, the lock screen could simply have the camera stream be its background, making the device almost transparent.

To make this transparency effect work, the rear camera would need to be set to the right zoom level and present the right part of the frame based on where the user is looking from, which could be calibrated by detecting the user’s face and its distance from the device via the front-facing cameras.[2]
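The geometry behind the zoom level and crop position is simple similar triangles. Rays from the eye through the screen edges spread out behind the phone, so the visible patch of the scene is wider than the screen, and moving your head sideways slides that patch the opposite way. This minimal sketch assumes a flat scene at a known distance, a rear camera at the screen’s center, and a 65° horizontal field of view; all of these numbers and names are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class WindowCrop:
    fraction: float      # crop width / full camera frame width
    center_shift: float  # crop center offset, as a fraction of frame width


def window_crop(screen_w_m: float, eye_dist_m: float, eye_x_m: float,
                scene_dist_m: float, camera_hfov_deg: float) -> WindowCrop:
    """Horizontal crop that makes the screen look like a window.

    Simplifications (all assumptions): the scene is a flat plane
    scene_dist_m behind the phone, the rear camera sits at the screen
    center, and eye_x_m is the eye's sideways offset from that center.
    """
    if eye_dist_m <= 0 or scene_dist_m <= 0:
        raise ValueError("distances must be positive")
    # Similar triangles: eye -> screen edges -> scene plane.
    visible_w = screen_w_m * (eye_dist_m + scene_dist_m) / eye_dist_m
    # Width the camera sees at the same distance.
    camera_w = 2 * scene_dist_m * math.tan(math.radians(camera_hfov_deg) / 2)
    # Moving the eye right shifts the visible patch left, and vice versa.
    shift_m = -eye_x_m * scene_dist_m / eye_dist_m
    return WindowCrop(visible_w / camera_w, shift_m / camera_w)


if __name__ == "__main__":
    # 6.5 cm wide screen held 30 cm from the eye, scene 2 m behind the phone.
    print(window_crop(0.065, 0.3, 0.0, 2.0, 65.0))
```

Under these assumed numbers, the crop is only about a fifth of the frame’s width, roughly a 5x digital zoom, and it widens as you bring the phone closer to your face. A convincing version would also apply a per-frame perspective warp rather than a plain crop, especially when the eye is off-axis.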

Conclusion

Even if Apple is working on 3D Photos and Gaze Scrolling, I highly doubt they’ll drop any hints about them at tomorrow’s WWDC. There’s a decent chance we’ll see something like CameraKit emerge, coupled with some advances in Photos’ ability to classify things.

Overall, I’m really excited about the opportunities that come from stereoscopic cameras and object detection. As I said at the beginning, I think we’ve entered a golden age of cameras. To really appreciate what that means, we have to let go of our notions of how we’ve always used cameras and imagine them as being integral to the device experience, always-on, understanding what we’re looking at and what we’re doing on the device. Their output will occasionally be photos and videos, but mostly they’ll be another context sensor inferring what we want and helping us navigate and remix the world.

[1] Calibration is a big deal with gaze tracking, as tracking drifts over time. It might be the most important technical part to get right. ↩︎

[2] Yes, this would be hard, but the effect would be nothing less than magical. From a product strategy perspective, this type of trick relies on great hardware+software integration and is just the kind of cool feature that separates Apple products from the competition.

The image output would require some perspective transformations, and as you looked at different parts of the screen it would need to alter that transformation. It's hard to say whether this effect would look good; that probably depends a lot on accuracy and calibration, and it may require a greater degree of gaze tracking accuracy than the other use cases. That said, it doesn't seem like it would need to be close to perfect to deliver a compelling experience. ↩︎